CoDe Lab @ 17th ICCWAMTIP

In December 2020, the Connected Devices Lab participated in the 2020 17th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP) and presented three accepted papers. The conference was held on the University of Electronic Science and Technology of China campus in Chengdu, China. The papers presented were "GAN-Based Synthetic Gastrointestinal Image Generation", "Recognising Emotions from Texts using a BERT-based Approach", and "Comparative Analyses of BERT, RoBERTa, DistilBERT, and XLNet for Text-Based Emotion Recognition". Their abstracts appear below.

GAN-Based Synthetic Gastrointestinal Image Generation

As with several medical image analysis tasks based on deep learning, gastrointestinal image analysis is plagued by data scarcity, privacy concerns, and an insufficient number of pathology samples. This paper frames the task of generating new and plausible samples of oesophageal cancer images as an image-to-image translation task. We evaluate two adversarially trained fully convolutional network architectures to generate synthetic diseased gastrointestinal images from segmentation maps prepared by expert clinicians. The synthetic images are evaluated both qualitatively and quantitatively, and the experimental results indicate that they are comparable to the original images. This potentially addresses the challenge of data scarcity and the need for heavy data augmentation in automated gastrointestinal image analysis.

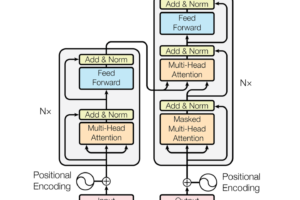

Recognising Emotions from Texts using a BERT-based Approach

Pre-trained models are popular because they are easy to train and reach superior accuracy in relatively short training periods. This paper analyses the efficacy of transformer encoders for detecting emotions (i.e., anger, disgust, sadness, fear, joy, shame, and guilt) on the ISEAR dataset. To achieve this, a two-stage architecture is proposed. The first stage is the Bidirectional Encoder Representations from Transformers (BERT) model, whose output feeds the second stage, a Bi-LSTM classifier that predicts the emotion class of each text. The results outperform the state of the art with a higher weighted average F1 score of 0.73, setting a new state of the art for emotion detection on the ISEAR dataset.
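For readers unfamiliar with the metric, the weighted average F1 score mentioned in this abstract can be sketched in plain Python as follows. This is a minimal illustration, not the paper's evaluation code: the function name `weighted_f1` and the toy labels are assumptions made for the example, and the calculation is intended to mirror what scikit-learn's `f1_score(..., average="weighted")` computes.

```python
from collections import Counter

# The seven ISEAR emotion classes named in the abstract.
EMOTIONS = ["anger", "disgust", "sadness", "fear", "joy", "shame", "guilt"]


def weighted_f1(y_true, y_pred, labels=EMOTIONS):
    """Mean of per-class F1 scores, weighted by each class's true count (support)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and of equal length")
    support = Counter(y_true)
    total = len(y_true)
    score = 0.0
    for label in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(1 for t, p in pairs if t == label and p == label)
        fp = sum(1 for t, p in pairs if t != label and p == label)
        fn = sum(1 for t, p in pairs if t == label and p != label)
        denom = 2 * tp + fp + fn
        f1 = 2 * tp / denom if denom else 0.0  # F1 = 2TP / (2TP + FP + FN)
        score += f1 * support[label] / total   # classes with no true samples add 0
    return score


# Toy labels for illustration only (hypothetical, not ISEAR data).
y_true = ["joy", "joy", "anger", "fear"]
y_pred = ["joy", "anger", "anger", "fear"]
print(f"{weighted_f1(y_true, y_pred):.2f}")  # prints 0.75
```

Because each class contributes in proportion to its support, frequent classes influence the weighted score more than rare ones.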

Comparative Analyses of BERT, RoBERTa, DistilBERT, and XLNet for Text-Based Emotion Recognition

The success of transformers is attributed to their stronger language understanding abilities, which have yielded state-of-the-art results in medicine, education, and other major NLP tasks. This paper analyses the efficacy of the BERT, RoBERTa, DistilBERT, and XLNet pre-trained transformer models in recognising emotions from texts. It does so by comparing each candidate model's output with those of the remaining candidates. The implemented models are fine-tuned on the ISEAR data to classify emotions as anger, disgust, sadness, fear, joy, shame, or guilt. Using the same hyper-parameters, the recorded model accuracies in decreasing order are 0.7431, 0.7299, 0.7009, and 0.6693 for RoBERTa, XLNet, BERT, and DistilBERT, respectively.
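To make the spread between the four models easier to read, the short sketch below ranks the accuracies reported in this abstract and expresses each model's gap to the best performer in percentage points. The helper name `rank_with_gaps` is an assumption made for this illustration; only the four accuracy figures come from the abstract.

```python
# Accuracies as reported in the abstract (ISEAR, same hyper-parameters).
REPORTED_ACCURACY = {
    "RoBERTa": 0.7431,
    "XLNet": 0.7299,
    "BERT": 0.7009,
    "DistilBERT": 0.6693,
}


def rank_with_gaps(accuracies):
    """Return (model, accuracy, gap to best in percentage points), best first."""
    ranked = sorted(accuracies.items(), key=lambda item: item[1], reverse=True)
    best = ranked[0][1]
    return [(name, acc, round((best - acc) * 100, 2)) for name, acc in ranked]


for name, acc, gap in rank_with_gaps(REPORTED_ACCURACY):
    print(f"{name:<10} accuracy={acc:.4f}  gap_to_best={gap:.2f} pp")
```

On these figures, XLNet trails RoBERTa by 1.32 percentage points, BERT by 4.22, and DistilBERT by 7.38.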