The Emergence of the Age of AI

As stated in my previous article, I want to show where AI is taking us in the future. First, however, I need to describe how AI has evolved during its short life. I have written three articles that develop this theme. In this first article, I briefly outline the background and what had been achieved up to the start of the millennium. In the next article, I describe the impact that machine learning paradigms such as genetic algorithms and neural networks have made in the last 20 years. Finally, in the third article, I outline our future in a world dominated by AI.

The emergence of artificial intelligence (AI) has led to applications that are now having a profound impact on our lives. Yet this is a technology that is barely 60 years old. Indeed, the term AI was first coined at the Dartmouth conference in 1956, at a time when the first digital computers were beginning to appear in university laboratories. The participants at this conference were predominantly mathematicians and computer scientists, many of whom were interested in theorem proving and in algorithms that could be tested on these machines. There was much optimism at the conference, encouraged by early successes in the field, and it led to euphoric predictions about AI that proved to be overhyped. The thinking at the time was that if computers could solve problems that humans find hard, such as proving mathematical theorems, then it should be possible to get computers to solve the problems that humans find easy. This proved not to be the case. The optimism was misplaced because problems that humans find hard can turn out to be easy for computers, and vice versa. Perhaps this is not surprising: computers work in the language of mathematical logic and could therefore be expected to perform better than humans on precise logic problems.

Trenchard More, John McCarthy, Marvin Minsky, Oliver Selfridge and Ray Solomonoff / Photographer: Joe Mehling / Source: The Dartmouth College Artificial Intelligence Conference, “The Next Fifty Years.” AI Magazine

Over the next fifty years or so, progress was at times erratic and unpredictable, because AI is a multidisciplinary field that is not (at the present time) underpinned by any strong theories. AI software paradigms and techniques emerged from theories in cognitive science, psychology, logic and so on, but did not mature sufficiently, partly because of the experimental foundations on which they were based and partly because the hardware of the day was not powerful enough. AI programs demand more processing speed and memory than conventional software. Moreover, the emergence of other technologies, such as the Internet, has shaped the evolution of AI systems. Thirty years ago, it was assumed that AI systems would be stand-alone systems, such as robots or expert systems. But most of today’s AI applications combine technologies. For example, route-finder applications, such as those used in SatNav road navigation devices, combine satellite navigation technology with AI logic. At a more advanced level, driverless cars combine deep neural networks with Global Positioning System technology and advanced vision technology. In other applications, such as Google’s language translation program, Google Translate, machine learning techniques (deep neural networks) are combined with large databases and Internet technologies.

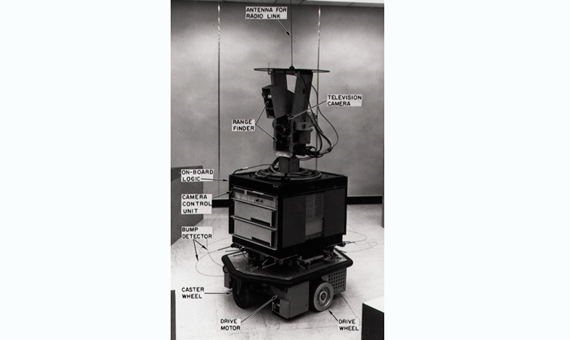

Fig.1. SRI’s Shakey, the first mobile robot that could make decisions about how to move in its surroundings / Source: SRI International

The shifting focus of AI research

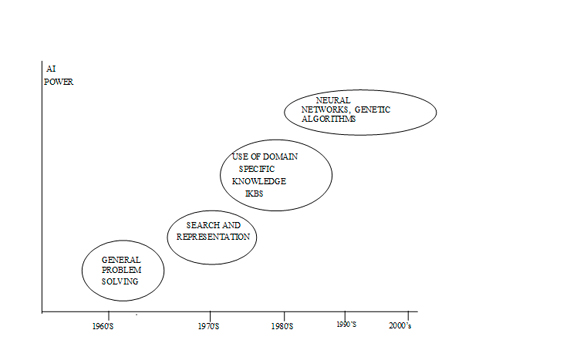

The focus of AI research has shifted several times over the last 60 years. The first phase was triggered by the Dartmouth Conference and focussed on techniques involving General Problem Solving (GPS) (Newell and Simon 1971). This approach assumed that any problem that could be written in program code, be it mathematical theorem proving, chess playing, or finding the shortest distance from one city to another, could be solved. Such problems would normally involve representing the relevant knowledge in a computer-readable format and then searching through possible states until a solution is found. For example, in chess playing there would be a symbolic representation of the board, the pieces, the possible moves, the best moves based on heuristics drawn from previous tournaments, and so on. During a game, the search would find the best move. However, despite showing good promise initially, the GPS approach ran out of steam fairly quickly. The main reason is that the number of search combinations increases exponentially as problems increase in size. Thus, the second phase of research looked at ways to make searching more tractable, by reducing or pruning the search space, and at ways of representing knowledge in AI. There were some research successes during this period. Most notable were SHRDLU (Winograd 1972) and Shakey the robot.
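The state-space search idea described above can be illustrated with a minimal sketch. It is not the GPS program of Newell and Simon; it is a generic uniform-cost search over a hypothetical road map, with city names and distances invented purely for illustration. The helper `count_paths` is likewise an assumption, added only to show how quickly the number of candidate move sequences grows.

```python
import heapq

# Hypothetical road map: city -> {neighbour: distance}.
# Names and distances are invented for illustration only.
ROADS = {
    "A": {"B": 4, "C": 2},
    "B": {"A": 4, "C": 1, "D": 5},
    "C": {"A": 2, "B": 1, "D": 8},
    "D": {"B": 5, "C": 8},
}


def shortest_route(roads, start, goal):
    """Uniform-cost search: always expand the cheapest state found so far."""
    frontier = [(0, start, [start])]  # (cost so far, current city, path taken)
    visited = set()
    while frontier:
        cost, city, path = heapq.heappop(frontier)
        if city == goal:
            return cost, path
        if city in visited:
            continue  # a cheaper route to this city was already expanded
        visited.add(city)
        for neighbour, distance in roads[city].items():
            if neighbour not in visited:
                heapq.heappush(
                    frontier, (cost + distance, neighbour, path + [neighbour])
                )
    return None  # no route exists


def count_paths(branching, depth):
    """Move sequences of a given length when every state has `branching` successors."""
    return branching ** depth


if __name__ == "__main__":
    print(shortest_route(ROADS, "A", "D"))
    print(count_paths(10, 6))
```

With only four cities the search finishes at once, but a fixed branching factor of 10 already yields a million six-step sequences, which is the kind of exponential growth that motivated pruning the search space.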

AI then took a step backwards when the Lighthill report, published in the UK in 1973, was very negative about the practical benefits of AI. There were similar misgivings about AI in the US and the rest of the world. Recognising the possible benefits of AI, however, the Japanese gave it a new lease of life in 1982 with the announcement of a massive project called the Fifth Generation Computer Systems project (FGCS). This project was very extensive, covering both hardware and software, and included intelligent software environments and fifth-generation parallel processing, amongst other things. It served as a catalyst for interest in AI in the rest of the world. In the USA, Europe and the UK there was a move towards building Intelligent Knowledge Based Systems (IKBS), which were also called expert systems. The catalyst for this activity in the UK was the ALVEY project, a large collaborative project funded by the UK government, industry and commerce that looked at the viability of using IKBS in more than 200 demonstrator systems.

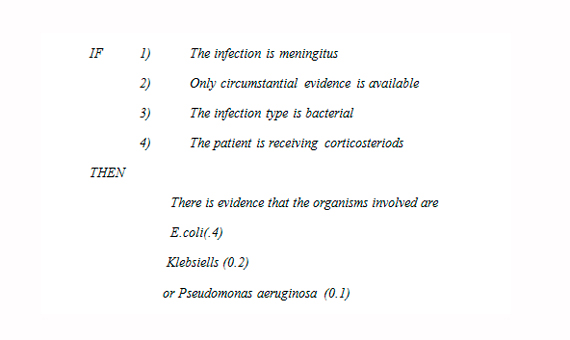

Fig.2. Typical Rule Taken from the Medical Expert System Mycin / Source: author

This paved the way for a third phase of AI research concentrating on IKBS which, unlike the GPS universal knowledge approach, relied upon specific domain-based knowledge to solve AI problems. With IKBS, a problem such as diagnosing an infectious disease could be solved by incorporating the domain knowledge for that problem into the system. Such knowledge could be acquired from human experts in the domain or by some other means, and would often be written in the form of rules. Fig.2 shows a typical rule taken from the medical expert system called MYCIN. The collection of rules and facts making up this knowledge was called a knowledge base. A software inference engine would then use that knowledge to draw conclusions. IKBS made quite an impact at the time, and many of these systems, such as R1, MYCIN and Prospector, were used commercially, and in some cases still are (Darlington 2000).
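The relationship between a knowledge base and an inference engine can be sketched in a few lines. This is a simplified forward-chaining loop under stated assumptions, not a reconstruction of MYCIN or of any other system named here. The rules and fact names below are invented for illustration and carry no medical meaning.

```python
# Hypothetical knowledge base: each rule is (set of premises, conclusion).
# These rules are invented for illustration and are not taken from MYCIN.
RULES = [
    ({"fever", "high_white_cell_count"}, "infection_suspected"),
    ({"infection_suspected", "culture_positive"}, "refer_to_specialist"),
]


def forward_chain(facts, rules):
    """Fire rules repeatedly until no new conclusion can be added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            # A rule fires when all of its premises are already known.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    observed = {"fever", "high_white_cell_count", "culture_positive"}
    print(sorted(forward_chain(observed, RULES)))
```

Keeping the rules as data, separate from the loop that applies them, is the point of the design: the domain knowledge can be changed without touching the inference engine.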

However, IKBS had some shortcomings: their inability to learn and, in some cases, the perceived narrowness of their focus. The ability to learn is important because IKBS need regular updating, and doing this manually is time consuming. Machine learning techniques have now matured to the point where systems can learn with little or no human intervention. IKBS had a narrow focus because they did not have the “common sense” knowledge that a human expert can draw upon. This meant that many of these systems were very competent at solving problems within the narrow confines of their domain knowledge, but failed when confronted with an unusual problem that required common-sense knowledge. AI experts realised that expert systems lacked the common sense that we humans acquire from the day we are born. This was a severe impediment to the success of AI, because such systems were seen as brittle.

For this reason, a number of projects have been developed with a view to resolving this problem. The first was CYC (Lenat & Guha 1991). This was a very ambitious AI project that attempted to represent common-sense knowledge explicitly by assembling an ontology of familiar common-sense concepts. The purpose of CYC was to enable AI applications to perform human-like common-sense reasoning. However, shortcomings were identified with the CYC project, not least in dealing with the ambiguities of human language. Other, more recent approaches have drawn upon the “big data” approach, sometimes using an open-source model for data capture on the Web. For example, ConceptNet captures common-sense knowledge about the world that computers should have by enabling users to input that knowledge in a suitable form.

During the last few decades, machine learning has become a very important research topic in AI. It is mostly implemented using techniques like neural networks and genetic algorithms. This represents the fourth phase of AI research described in Fig.3, and I will look at the impact of these techniques in my next article.

Keith Darlington

References

Darlington, K. The Essence of Expert Systems. Pearson Education, 2000.

Lenat, D. and Guha, R. V. Building Large Knowledge-Based Systems. Addison-Wesley, 1991.

Winograd, T. Understanding Natural Language. Academic Press, 1972.

Newell, A. and Simon, H. A. Human Problem Solving. Prentice-Hall, Englewood Cliffs, N.J., 1971.

Source: OpenMind